An object in motion.

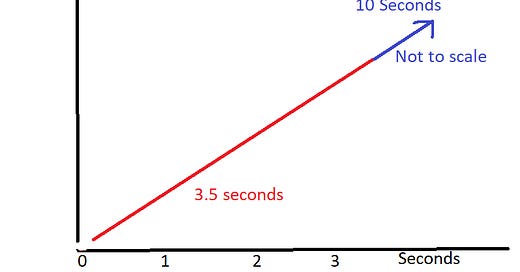

Forecasting the path of an object in motion requires understanding physics: gravity, wind resistance, friction, acceleration. You don’t just need to know what these variables are; you must understand the interplay between them. Understanding this interplay precisely is what allows us to send astronauts to the space station. With that in mind, let’s do a thought experiment. Consider this image:

An object has been traveling along the same path for 3.5 seconds. What is the probability that it will continue along that same path for an additional 10 seconds?

This question is impossible to answer without knowing the physical properties of the object and its location (for the purposes of calculating resistance, gravity, friction, etc.). Is the object a bowling ball being rolled down a freshly waxed alley at one mile per hour? Or is it a paper airplane being thrown off a balcony on a windy day?

You could begin making educated guesses if you were given more information, for example: the object is not aerodynamic; it is traveling at 3 miles per hour; it is on the ground. But you won’t get anywhere near 100% certainty without knowing almost exactly what each variable is.

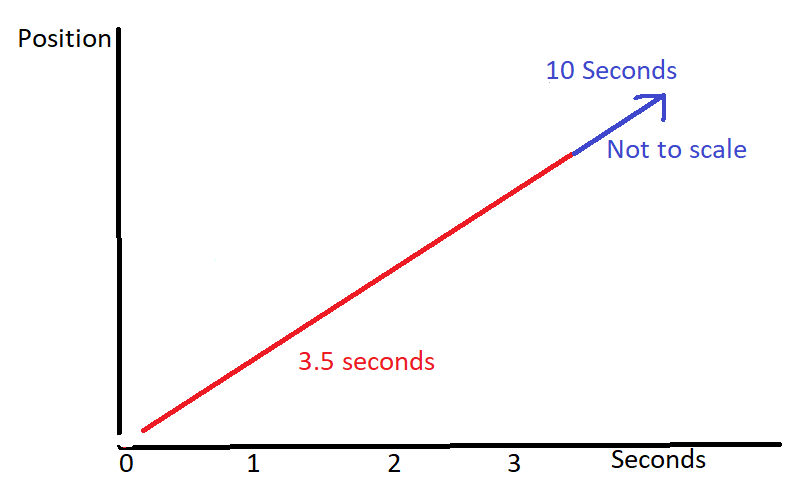

Now consider this image:

An object has been traveling along the same path for 2,500 years. What is the probability that it will continue along the same path for an additional 10 years?

The answer is near 100%. Once we know that the trend has been in place for 2,500 years, all other variables become irrelevant. There are things we can infer about this object. It is in space. It is far enough away from large objects that it is not experiencing a significant gravitational pull. It is not likely to be in an area densely populated by asteroids. But these inferences are purely for entertainment. We can answer the question with a high degree of certainty just by knowing how long the trend has been in place.

In the rest of this post, I’m going to explain why progress is more like the second example than the first: it is a powerful trend that started billions of years ago and hasn’t looked back since.

Which raises the question: what is progress?

The dictionary definition of progress is “forward or onward movement toward a destination.” I believe that the most relevant and all-encompassing “destination” for Earthlings is, and will remain, “more information,” at least for as long as humans exist and likely long after. Information has been increasing exponentially for some 3-4 billion years. So…

Progress = more information.

The exponential growth of information began with life. Life is a form of information, and life began 3-4 billion years ago. Specifically, it all started with anaerobic (they don’t need oxygen) heterotrophic bacteria. Heterotrophs can’t make their own food, so they get it from their environment. Animals and humans are heterotrophs because they eat other animals or plants to get energy. These bacteria are thought to have been among the very first living organisms.

Heterotrophic bacteria replicated themselves many trillions of times until eventually one of them made a fortunate error: it replicated itself perfectly imperfectly. Instead of producing another heterotrophic bacterium, it produced an autotrophic one. Autotrophs can make their own food, for example through photosynthesis. Plants and trees are mostly autotrophs (hat tip to the most famous partial exception, the carnivorous Venus flytrap, which still photosynthesizes but supplements its nutrients by digesting insects). Natural selection “decided” to keep autotrophic bacteria, thereby marking the first tiny bit of evolution.

Quick aside: an accidental byproduct of this new feeding mechanism was the oxygenation of Earth’s atmosphere (photosynthesis generates oxygen). This is the coolest example of the Butterfly Effect I have ever run across. One imperfect replication by a single-celled bacterium billions of years ago is responsible for life as we know it.

Until humans came along, information only grew through reproduction and evolution. Two velociraptors have more information than one, but a new species contributes far more: the first birds, which evolved from dinosaurs closely related to velociraptors, added far more to total information than yet another velociraptor would have.

Modern Homo sapiens evolved around 300,000 years ago, but it wasn’t until the invention of language (50,000 to 150,000 years ago) that humans became the primary driver of information growth.

Sticks and stones were humans’ first “tools.” Then came fire. Then came language. Language enabled collaboration on a far greater scale than had previously been possible.

Most animals communicate in some way. Bees do a dance to tell other bees where to find nectar. Monkeys have one call that says: “Look out above!” which they use to warn fellow monkeys of an approaching eagle. They have another call that says: “Look out below!” which they use to warn others about a lion or jaguar. But only humans can say: “I ran across a berry patch on the other side of the river, just next to the tall tree that was struck by lightning, but a tiger was nearby so we shouldn’t go pick them for a few hours.” (I’m paraphrasing an example from the book Sapiens, which I highly recommend).

In this post, I’m going to assume language started 70,000 years ago, because the exact date won’t change the takeaways of the argument and 70,000 years ago is where my population data starts.

Language turned each human experience into a resource that could be multiplied through communication. It started the flywheel of human progress. That flywheel has only accelerated ever since.

In the era of humans, progress has been driven by population growth and improving tools. For most of the past 70,000 years, population growth had the greater impact. Today, tools have the bigger impact. We’ll dive into each in a second, but first I want to make one final point about information growth: its underlying cause is natural selection.

The driver of 99.9% of information growth today is humanity’s obsession with innovation, and I don’t just mean advanced research and development efforts at companies like SpaceX. Humanity’s obsession with innovation is, like all human traits, derived from evolution. Humans who decided to use sticks and stones (innovators) survived better than humans who didn’t. Humans who farmed were able to sustain far higher population densities and hence proliferated to the point of pushing hunter-gatherers to the margins. In this sense, we can consider the human desire to innovate as equivalent to any other trait shaped by natural selection. Life (information) wants to sustain itself. Natural selection ensures that life cannot sustain itself by treading water. It must innovate to survive.

Human Experienced Years (EYs for short)

All people are either innovating themselves or creating demand for innovation.

Babies create demand for innovations in healthier formula, more absorbent nappies and mechanical rockers that soothe colic. Retirees create demand for innovation in healthcare that will enable them to stay mobile for longer and to pick up their grandchildren in their 80s. Adult children in their mid-20s who have yet to leave the nest create demand for innovation in entertainment. An engineer working on cutting-edge application-specific integrated circuits is innovating directly.

For this reason I think it’s fair to characterize the totality of human time as a resource that is fueling progress. This is a simple yet powerful heuristic.

Let’s do some math.

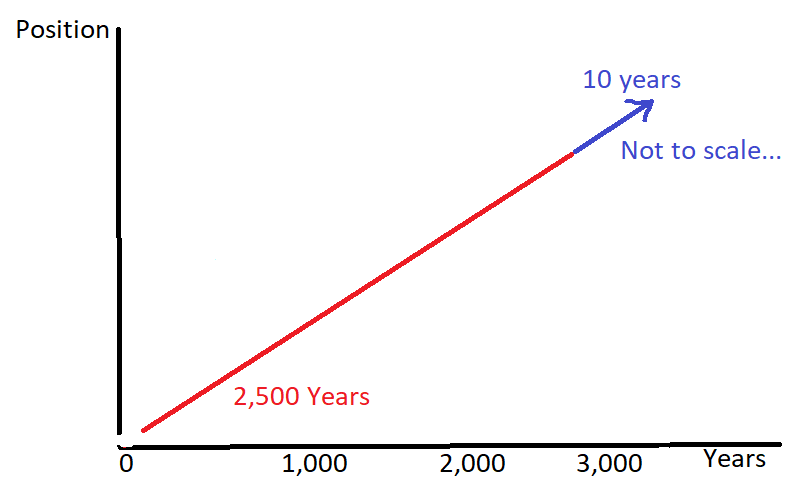

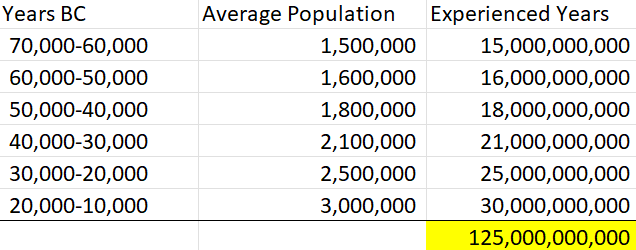

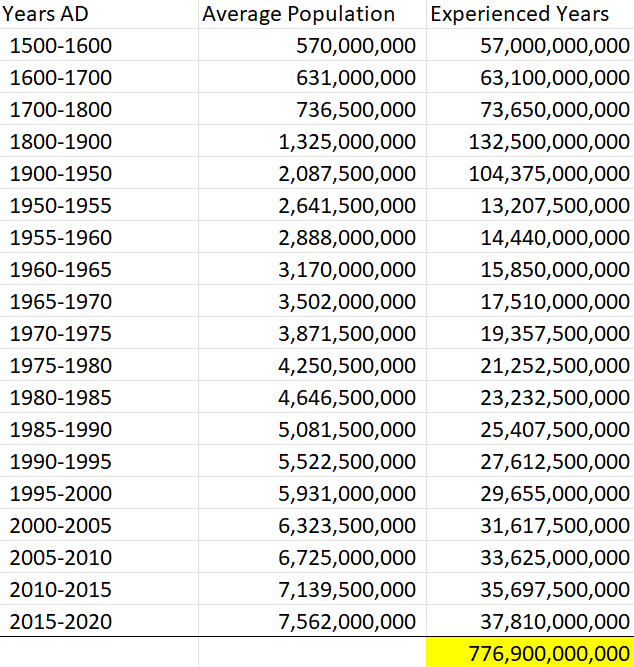

Today there are 7.75 billion humans on Earth. That means that over the course of one year, humanity will collectively experience 7.75 billion years, or 7.75 billion EYs. I looked up estimates of Earth’s historical population by year so that we can track EY growth over time.

From 70,000 BC through 10,000 BC, the human population grew from around 1.5 million to about 5 million. For each 10,000-year period, I multiplied the estimated average population by the number of years (10,000) to calculate the total EYs for that period. The results are shown in the images above.
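The per-period arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration, not the spreadsheet behind the post’s tables: the function names are hypothetical, and the example assumes that the first 10,000-year period averaged about 1.5 million people (the post’s population figure for 70,000 BC).

```python
def experienced_years(avg_population: float, years: float) -> float:
    """EYs for one period: estimated average population x length in years."""
    return avg_population * years


def total_experienced_years(periods: list[tuple[float, float]]) -> float:
    """Sum EYs over a list of (avg_population, years) periods."""
    return sum(experienced_years(pop, yrs) for pop, yrs in periods)


def years_to_match(total_eys: float, current_population: float) -> float:
    """Years it takes today's population to generate `total_eys` EYs."""
    # At a constant population, one calendar year yields `current_population` EYs.
    return total_eys / current_population


TODAY = 7.75e9  # today's population, from the post

# Assumption: the first 10,000-year period averaged ~1.5 million people.
first_period = experienced_years(1.5e6, 10_000)
print(f"{first_period:,.0f} EYs")                          # 15,000,000,000 EYs
print(f"{years_to_match(first_period, TODAY):.2f} years")  # 1.94 years
```

Under that assumption the first period comes to about 15 billion EYs, so a single year at today’s population covers a little more than half of it.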

We are currently generating 7.75 billion EYs per year. This is more than half of the total number of EYs humanity generated in the first 10,000-year period. In sixteen years, humanity will generate as many EYs as it generated over its first 60,000 years. For reference, here are some of the key innovations from that period (dates are in years before present):

First known art (sculptures and cave paintings): 70,000 years ago

Sewing needles: 50,000

Deep sea fishing (boats/fishing line/etc): 42,000

Long-term human settlements: 40,000-30,000

Ritual ceremonies (and by inference, religion): 40,000-20,000

Ovens: 29,000

Pottery used to make figurines: 28,000-24,000

Harpoons and saws: 28,000-20,000

Fibers used to make clothes and baskets: 26,000

Pottery used for cooking and storage: 20,000-19,000

Prehistoric warfare using weapons: 14,000-12,000

Domesticated sheep: 13,000-11,000

Farming: 12,000 (10,000 BC)

Here is the same calculation for EY growth from 5,000 BC to 0 AD.

Over this 5,000-year period, humans had nearly twice as many EYs as they did in the previous 60,000-year period.

For reference, here are some of the key innovations from this period.

Copper smelting

Seawalls

Cotton thread

Fired bricks

Plumbing

The wheel

Silk

Wine

Papyrus (used for writing)

Musical notation

Written language

Glass

Rubber

Concrete

Iron

Distillation (perfumery)

Crossbows

Universities

Steel

Loans

Lighthouses

Cranes

Catapults

Scythes

Horseshoes

Checks

Potassium nitrate

Canals

Water wheels

The concept of “News”

Here is the same calculation for EYs from 1500 to the present.

Over this 520-year period, humans had nearly 4 times as many EYs as they did in the 5,000-year period from 5,000 BC to 0 AD.

For reference, here are some of the key innovations from this period.

Rifles

Printing press

Floating dry docks

Telescopes

Newspapers

Microscopes

Slide rules

Mechanical calculators

Barometers

Vacuum pumps

Piston engines

Looms

Stoves

Lightning rods

Refrigeration

Steam engines

Carbonation

Steam-powered vehicles

Weighing scales

Machine tools

Air compressors

Sewing machines

Telegraph

Cotton gin

Hydraulic press

Hot air balloons

Parachutes

Plywood

Vaccines

Morphine

Internal combustion engines

Wristwatches

Morse code

Batteries

Trains

Cars

Flush toilets

Color photography

Plastic

Rechargeable batteries

Pasteurization

Dynamite

Barbed wire

Stainless steel

Metal detectors

Telephone

Lightbulb

Wind turbines

Ballpoint pens

Cardboard

Electricity

Appliances

Zeppelins

Airplanes

Penicillin

Television

Electron microscopes

Synthetic fiber

Nuclear fission

Ballistic missiles/rockets

Atomic bomb

Hard disk drive

Personal computers

Satellites

Integrated circuits

Lasers

Spaceflight

Microprocessors

Space station

Video games

The Global Positioning System (GPS)

Flash memory

Digital media players

Cell phones

DNA profiling

Lithium-ion batteries

The World Wide Web

Bluetooth

I’m going to stop there for the sake of brevity, and because most notable inventions since then have arrived within the lifetimes of most people reading this.

The takeaway is this: as time passes, humanity has managed to fit more innovation into shorter windows. This is partly because there are more humans, and hence more EYs during which people can think about ways to improve things. It is also because tools free up human time, which has the same effect.

Tools

For our purposes, I’m going to treat anything humans create as a “tool.” Art and ritual ceremonies are both tools because they help to build culture, which in turn enables humans to act cohesively in larger groups and to share resources and information. Language and education are also tools, just like pottery and harpoons.

The cool thing about tools is that they improve over time. This is because tools are made with tools. Better tools enable humans to make yet better tools in a virtuous cycle. Let’s do some extrapolating.

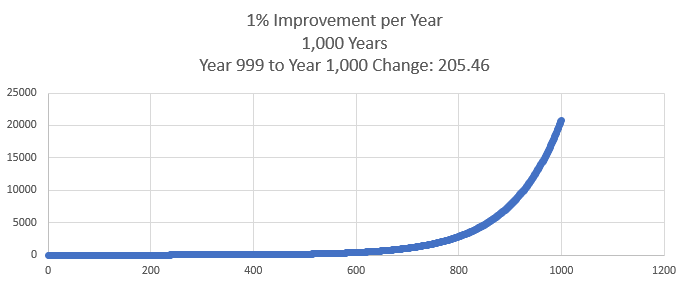

In year 1, we’ll say humans have 1 unit of novel information (NI for short). The tools available in year 1 allow humans to increase the amount of NI by 1% per year. Novel information includes a new or improved tool, but not the duplication of an existing tool: using one hammer as a model to create an identical hammer would not count as NI, while making a new hammer with an improved design would. 1% annual growth in novel information doesn’t seem outlandish. What does that growth rate lead to over a period of 1,000 years?

In year 1 we have 1 unit of NI. In year 2 we have 1.01 units. In year 10 we have 1.094 units (1.01 multiplied by itself 9 times). But right around year 600, things start to take off.

From year 600 to year 610 we have 41 units of growth.

From year 700 to year 710 we have 110 units of growth.

From year 999 to year 1000 we have 205.46 units of growth…

What’s more, by year 1,000 we have 20,751.64 units of NI. Remember, we started with just 1.
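The figures above follow from a simple closed form. Assuming, as in the example, 1 unit of NI in year 1 and 1% compounding every year, year n holds 1.01 raised to the power n − 1, and the growth between years a and b is:

$$
\mathrm{NI}_n = 1.01^{\,n-1},
\qquad
\mathrm{NI}_b - \mathrm{NI}_a = 1.01^{\,a-1}\left(1.01^{\,b-a} - 1\right)
$$

For years 600 to 610, that is 1.01^599 × (1.01^10 − 1) ≈ 387.7 × 0.1046 ≈ 40.6, which rounds to the 41 units above; and NI in year 1,000 is 1.01^999 ≈ 20,751.64.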

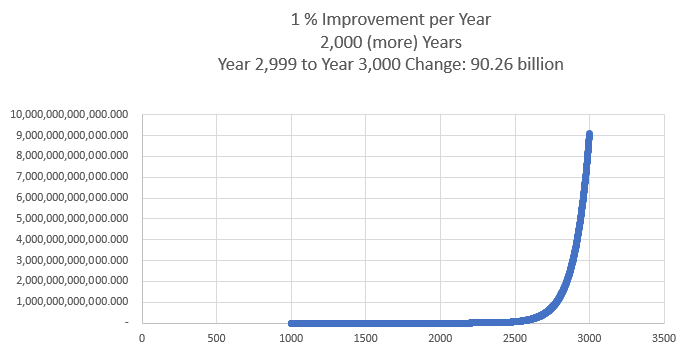

What about the next 2,000 years? This is where it really starts to get gnarly.

From year 1,000 to 1,010 we have 2,171 units of growth.

From year 1,500 to 1,510 we have 314,313 units of growth.

From year 2,000 to 2,010 we have 45,504,016 units of growth.

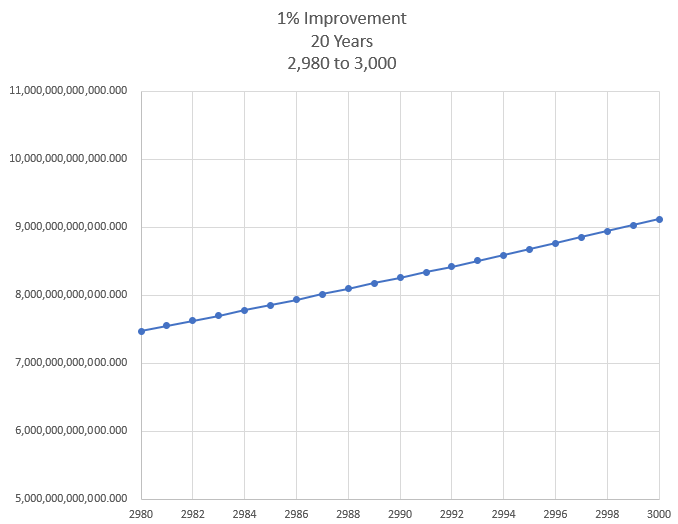

From year 2,999 to 3,000 we have 90,256,523,294 units of growth.

We end with a total resource pool of more than 9.1 trillion units of NI.
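The compounding figures in this section can be reproduced with a short Python sketch. It uses the post’s own assumptions (1 unit of NI in year 1, 1% growth per year); the names `RATE`, `novel_information` and `growth` are hypothetical.

```python
RATE = 0.01  # 1% growth in novel information (NI) per year, per the post


def novel_information(year: int) -> float:
    """Units of NI in a given year, starting from 1 unit in year 1."""
    # Year 1 has not compounded yet, so the exponent is year - 1.
    return (1 + RATE) ** (year - 1)


def growth(start_year: int, end_year: int) -> float:
    """Units of NI added between start_year and end_year."""
    return novel_information(end_year) - novel_information(start_year)


windows = [(600, 610), (700, 710), (999, 1000), (1000, 1010),
           (1500, 1510), (2000, 2010), (2999, 3000)]
for start, end in windows:
    print(f"Year {start:>5,} to {end:>5,}: {growth(start, end):,.2f} units")

print(f"Total by year 3,000: {novel_information(3000):,.0f} units")
```

Because each year multiplies the previous total, the absolute growth in any fixed window keeps rising even though the percentage rate never changes.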

The thing is, humans don’t live for thousands of years. We live for about 80 years right now, and we can’t easily remember what life was like much more than 20 years ago. Over shorter periods of time, exponential growth is like a chameleon blending into the jungle of progress.

Most people know we’re progressing, but the increasing rate of change remains hidden.

1 EY today is not equal to 1 EY yesterday

Tools are useful in two ways. First, they improve productivity. A farmer could plant his crop by hand, but it will take far less time if he’s using a John Deere tractor. Second, tools make new things possible. Humans wouldn’t be able to build skyscrapers or decode the human genome without modern tools. Let’s look at the history of farming productivity to get an idea of how impactful tools can be over time.

In 1800, 83% of the American workforce worked on farms. Today, that figure is around 1.4%. I don’t have comparable global figures, so let’s assume they’re approximately the same. Let’s further assume that 50% of the population is “in the workforce” in both periods (i.e. not retired, not a child, not a homemaker, etc.). We can then calculate the total number of hours worked per year to feed the human population in 1800 and compare that to today.

1800

Population: 1,000,000,000

Workforce: 500,000,000

Workers employed on farms: 500,000,000 X .83 = 415,000,000

Hours per work year: 2,000

Hours worked on farms: 830,000,000,000 (830 billion)

If there had been no improvement in farming productivity, the calculation for 2022 would be…

Population: 7,775,000,000

Workforce: 3,887,500,000

Workers employed on farms: 3,887,500,000 X .83 = 3,226,625,000

Hours per work year: 2,000

Hours worked on farms: 6,453,250,000,000 (6.45 trillion)

However, thanks to improved productivity, only 1.4% of the workforce works on farms. Let’s do the calculation again to see how much time humanity has saved.

Workforce: 3,887,500,000

Workers employed on farms: 3,887,500,000 X .014 = 54,425,000

Hours worked on farms: 54,425,000 X 2,000 = 108,850,000,000 (108.85 billion)

To summarize, humans are spending 721.15 billion fewer hours working on farms than they did in 1800, while feeding an additional 6.775 billion people. Put another way, compared with a world in which farming productivity never improved, humans have 6.34 trillion (6.45 trillion less 108.85 billion) additional hours to spend on something other than farming every single year. Here’s yet another way to look at it. The average human lives 80 years, which is 80 X 365 X 24 = 700,800 hours. Hence, the 6.34 trillion hours saved represent [6.3444 trillion divided by 700,800] ≈ 9,053,082 human lifetimes, per year.
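The farm-hours arithmetic above can be checked with a short Python sketch. It uses the post’s own assumptions (50% of the population in the workforce, 2,000 hours per work year, 80-year lifetimes); the constant and function names are hypothetical.

```python
HOURS_PER_WORK_YEAR = 2_000
WORKFORCE_SHARE = 0.5               # post's assumption: half the population works
HOURS_PER_LIFETIME = 80 * 365 * 24  # 700,800 hours in an 80-year life


def farm_hours(population: float, farm_share: float) -> float:
    """Total hours worked on farms per year."""
    workforce = population * WORKFORCE_SHARE
    return workforce * farm_share * HOURS_PER_WORK_YEAR


hours_1800 = farm_hours(1_000_000_000, 0.83)          # 830 billion
hours_2022_no_gain = farm_hours(7_775_000_000, 0.83)  # 6.45 trillion
hours_2022_actual = farm_hours(7_775_000_000, 0.014)  # 108.85 billion

saved = hours_2022_no_gain - hours_2022_actual
print(f"Fewer farm hours than in 1800: {hours_1800 - hours_2022_actual:,.0f}")
print(f"Hours freed per year:          {saved:,.0f}")
print(f"Lifetimes freed per year:      {saved / HOURS_PER_LIFETIME:,.0f}")
```

Keeping the shares and population as parameters makes it easy to rerun the comparison with different assumptions, for example a different global farm-employment share.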

Household appliances, enabled by cheap electricity, are another example with eye-catching numbers. The average family of four saved 40 hours per week after gaining access to dishwashers, dryers, washing machines, vacuums, etc. That’s 10 hours per person per week. The United States population today is 332 million. Hence, household appliances give the United States alone 332 million X 10 X 52 = 172,640,000,000 (172.64 billion) additional hours per year compared to an alternate universe in which they were never invented. Using the same lifetime calculation as above, this equates to 246,347 lifetimes per year.

I’ll use the Human Genome Project as my last example. Sequencing the first human genome took 13 years and 2,800 researchers, and cost $3 billion. Today a genome can be sequenced for less than $1,000 in less than 24 hours. Better still, one person can sequence many genomes simultaneously if they have the machines for it.
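The appliance numbers follow the same pattern: hours saved per person per week, scaled to a population and a year, then divided by the hours in an 80-year lifetime. A minimal sketch under the post’s figures (40 hours per week for a family of four, 332 million people); the names are hypothetical.

```python
HOURS_PER_LIFETIME = 80 * 365 * 24  # 700,800 hours, as in the post
WEEKS_PER_YEAR = 52


def annual_hours_saved(population: int, hours_per_person_per_week: float) -> float:
    """Hours freed per year across a whole population."""
    return population * hours_per_person_per_week * WEEKS_PER_YEAR


# 40 hours per week saved by a family of four -> 10 hours per person per week
per_person = 40 / 4
us_hours = annual_hours_saved(332_000_000, per_person)
print(f"{us_hours:,.0f} hours per year")                           # 172,640,000,000 hours per year
print(f"{us_hours / HOURS_PER_LIFETIME:,.0f} lifetimes per year")  # 246,347 lifetimes per year
```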

Every hour freed up by improved tools is an hour that people can allocate to something else.

Artificial General Intelligence (AGI) - when tools can make tools

AGI is the ability of an intelligent agent to understand or learn any intellectual task that a human being can. I’m going to finish this post by pointing out something that may not be obvious, but which reinforces our definition: progress = information growth.

By far the largest source of information growth over the past twenty years has been picture and video data. There isn’t a close second. The largest contributor to picture and video data growth? Social media (I’m including YouTube under this umbrella). If you think teenagers uploading silly videos of themselves to their Instagram accounts aren’t contributing to progress, think again. Even the comments on those videos and images are incredibly useful.

These images and videos create enormous demand for advancements in machine learning and neural networks. The same skills required to build Facebook’s and Google’s algorithms that recognize and take down violent, racist or pornographic content are also used to build algorithms that detect breast cancer and beta-amyloid proteins (connected to Alzheimer’s) on medical scans before they would become visible to a radiologist’s eye.

Humans are biological neural networks that learn by absorbing data. Babies learn to walk by watching other humans and trying it out themselves. Babies learn to talk by listening to other humans.

AGI will come about in much the same way, by showing computers billions of hours of video data, billions of images, and by having them read everything from scientific papers to the comments on a cat video.

When humans finally create artificial general intelligence (AGI), tools will start making better tools on their own. This is when some people think the technological singularity will start. Consider the following.

Once a human-equivalent brain has been encoded into a computer, it can be replicated as many times as the available compute resources allow. What’s more, it won’t take long for the computer-brain to function at the level of the top human minds. Soon after, it will surpass even the smartest human. The only limitation will be how quickly humans can build the infrastructure on which these brains will run. This is a long thesis for cloud and semiconductor businesses.

The demand for superhuman AGI will obviously be enormous. We all watched how quickly a Covid vaccine was developed: big demand makes for fast innovation. If AGI is developed, supply will clearly be the limiting factor. How fast might humanity, fueled by the inevitable arms race AGI will create, be able to increase its effective compute capacity?

There are three components:

Physical wafer manufacturing - how many of the silicon discs on which chips are etched we can build

Hardware design improvements - number of transistors, 3D stacking, etc.

Software improvements

Let’s look at each.

Wafer growth is expected to reach nearly 9% this year, a year of record capex. Let’s be conservative and assume that the world can only build 10% new wafer capacity per year.

Hardware design improvements have been resulting in about 15% improvement in instructions per cycle/clock (IPC) per year. This is a decent heuristic for the contribution of design improvements. Let’s be conservative and say that improvements to hardware design can only improve compute by 10% per year.

I found many examples where software upgrades made by Nvidia, Cadence Design, Google, etc. increased the performance of deep learning models by 3X to 50X over the past few years. At 3X over three years, we’re talking about 44% annual improvement. Let’s be conservative and say that 10% annual improvement from software is achievable over the long term.

Together, these very conservative estimates suggest that, without the help of AGI, humans can increase effective compute capacity by 30%+ per year (1.10 X 1.10 X 1.10 ≈ 1.33). Once we have achieved AGI, the second and third components above (hardware design and software) will improve at rates far beyond what humans have been able to achieve. Let’s assume that, with the help of AGI, the annual growth rate of total effective compute capacity rises by 5 percentage points each year. What does this look like over time?
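Turning a total speedup into an annual rate uses the compound-growth formula: annual rate = multiple^(1/years) - 1. The sketch below assumes “the past few years” means three years, which is what makes a 3X gain correspond to roughly 44% per year; `annualized_rate` is a hypothetical helper.

```python
def annualized_rate(total_multiple: float, years: float) -> float:
    """Constant yearly growth rate that compounds to `total_multiple` over `years`."""
    return total_multiple ** (1 / years) - 1


# Assumption: "the past few years" is read as three years.
rate = annualized_rate(3, 3)
print(f"3X over 3 years: {rate:.1%} per year")  # 44.2% per year

# Sanity check: compounding the rate back over 3 years recovers the 3X total.
print(f"Check: {(1 + rate) ** 3:.2f}X")         # 3.00X
```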

Year 0, AGI is achieved: Base Capacity = 1

1 X 1.3 (30% improvement) = 1.3

1.3 X 1.35 (35% improvement) = 1.76

1.76 X 1.40 (40% improvement) = 2.46 (capacity has more than doubled)

2.46 X 1.45 = 3.56

3.56 X 1.5 = 5.34 (5X capacity achieved in year 5)

5.34 X 1.55 = 8.28

8.28 X 1.6 = 13.25

13.25 X 1.65 = 21.87

21.87 X 1.7 = 37.17

37.17 X 1.75 = 65.06

Capacity increased 65X by year 10. Continuing at this rate of growth, we’ll reach 1,605X by year 15 and 73,547X by year 20.
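The year-by-year compounding above (growth starting at 30% and rising by 5 percentage points each year) can be reproduced with a short loop. This is a sketch of the post’s scenario, not a forecast model; `capacity` and its parameters are hypothetical names.

```python
def capacity(years: int, start_rate: float = 0.30, rate_step: float = 0.05) -> float:
    """Effective compute capacity after `years` years, starting from 1 in year 0.

    Year 1 grows by `start_rate`, and each later year's growth rate is
    `rate_step` higher than the previous year's (30%, 35%, 40%, ...).
    """
    level = 1.0
    for year in range(years):
        level *= 1 + start_rate + rate_step * year
    return level


for year in (5, 10, 15, 20):
    print(f"Year {year:>2}: {capacity(year):,.2f}X")
```

Because the growth rate itself keeps rising, the curve bends upward faster than ordinary exponential growth at any fixed rate.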

Does this pass the sniff test? It’s not exactly an apples-to-apples comparison, but the most powerful supercomputer in 2000 had a speed of 7.23 teraflops. Today the most powerful supercomputer boasts a top speed of 1.1 exaflops. That’s an increase of 152,143X.

The companies that own data centers and the companies that build the chips will be the primary beneficiaries not just of AGI, but of the path we’re already on to it.

Long live the cloud, long live semiconductors.